
Confirmation Bias: Decision Traps in Public Service

The views expressed are those of the author and do not necessarily reflect the views of ASPA as an organization.

By Terry Newell

September 10, 2018

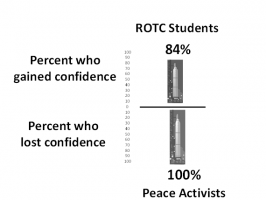

In a decades-old experiment, two groups of undergraduates (ROTC students and peace activists) were given identical accounts of four serious breakdowns involving nuclear weapons, including a simulation which led to an actual missile launch. They were then asked whether their confidence in our ability to manage our nuclear arsenal had changed. Eighty percent reported that it had. But as the diagram shows, 84 percent of ROTC students became more confident, while 100 percent of peace activists became less confident. The former found evidence in the accounts for their view that we could safely manage our nuclear weapons (after all, no nuclear war started); the latter found evidence that we could not.

Confirmation bias, the tendency to find evidence for one’s existing views and to discount contradictory evidence, shows up in arguments about the safety of vaccination, gun control, climate change, the death penalty, taxes, immigration and a host of other public issues. Americans seem increasingly divided into two camps, each convinced of its correctness. The problem is magnified in our virtual world. Studies of Facebook find that users share stories they accept and not those they reject. According to Cass Sunstein in “How Facebook Makes Us Dumber” (Bloomberg View, January 8, 2016), this makes homogeneous policy clusters and group polarization more likely. There is even evidence that people increasingly want to live mostly among people who think as they do. As Bill Bishop chronicled in “The Big Sort: Why the Clustering of Like-Minded America Is Tearing Us Apart” (2009), the number of politically competitive House districts keeps declining, amid signs that people are relocating to live near those who regularly confirm their views.

What is true outside public organizations is true inside them too, as different specialties, functions and organizations see the world in differing ways and strongly reinforce their own views. Making and implementing evidence-based public policy in such a world is a challenge.

Why Do We Do It?

The strength of confirmation bias suggests it is powered by human psychology and physiology, and perhaps by evolutionary biology as well. Confirmation bias provides emotional reassurance in an uncertain world. It serves as a safety valve against cognitive dissonance, the discomfort we feel when our beliefs are challenged by contradictory information.

As Sara and Jack Gorman note in “Denying to the Grave: Why We Ignore the Facts That Will Save Us” (2017), “it feels good to ‘stick to your guns.’” Holding firm releases dopamine, a brain chemical associated with pleasure and addiction. Once a dopamine pathway is established, it rewards us for continuing to think the same way, which is why we enjoy “finding” confirmation for our views.

Confirmation bias often takes hold in highly emotional and stressful situations. As psychologist Paul Slovic put it, “Beliefs we come to have when highly emotionally aroused are hard to dislodge,” which may partly explain why we don’t want to give up our views.

Confirmation bias also binds us to clusters of like-minded thinkers. Those relationships bolster our sense of power and control and make us feel protected from outside threats.

Is There an Antidote?

The prevailing view on how to counter confirmation bias is to give people more information so that reason will prevail. But it often does not, as the nuclear arsenal experiment demonstrated. Indeed, there is evidence that the more cognitive complexity people can handle, the stronger their confirmation bias: being smart makes us better at digging up evidence to support our views.

Some more promising approaches include:

- Appeal to people’s values and emotions before their reason. People are more open to changing their minds when their core values and emotions are engaged. The environmental movement, for example, took off when people saw pictures of the Earth from space. Only then were they open to scientific data.

- Diversity helps. Engage people with a wider range of alternative views. As the Gormans put it, “try to bring them into a group that is based on better data.”

- Use deliberative democracy, in which a group with diverse views on a topic is presented with a range of different ideas and evidence and then engages in thoughtful conversation. Even if agreement is not reached, this approach can moderate extreme views.

- Seek disconfirming evidence. This is tough: we don’t like questioning ourselves. But looking at contrary research, websites, articles and books can be useful. Another way: ask people who don’t agree with you what they see and why – and suspend your desire to disagree with them.

- Ask yourself: “If I am wrong, what would I expect to see?” Then, go in search of evidence for it.

Confirmation bias is human, but it need not prevail in the decisions we make.

Author: Terry Newell is President of his training firm, Leadership for a Responsible Society, and is the former Dean of Faculty of the Federal Executive Institute. He can be reached at [email protected]. Additional essays on similar topics can be found at www.thinkanew.org.

Douglas

September 17, 2018 at 10:42 am

Very well-thought-out article. I would add to your observation that “The prevailing belief on stopping confirmation bias is to give people more information so reason will prevail”: increasingly, both the source and the amount of information, primarily from biased mainstream media and parroted by pundits, are adding fuel to the fire and pulling us away from civil one-on-one discourse.